Recurrent State Representation Learning with Robotic Priors

Motivation

In robotics, sensory inputs are high-dimensional, but only a small subspace is relevant for guiding a robot's actions. Earlier work by Jonschkowski and Brock (2015) introduced an unsupervised learning method that extracts a low-dimensional representation using robotic priors. These robotic priors encode knowledge about the physical world in a loss function. However, their method and other methods for learning such a representation rely on a Markovian observation space.

Description of Work

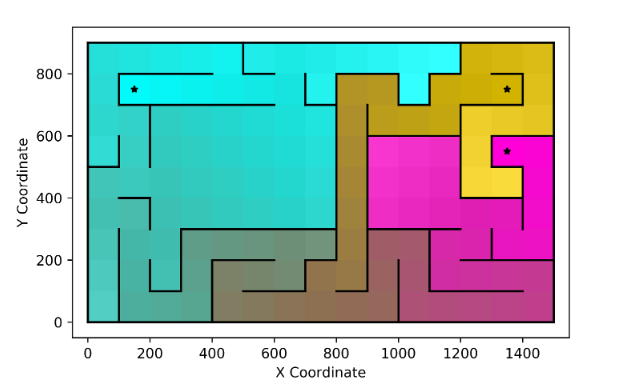

We extend the idea of robotic priors to non-Markovian observation spaces. To this end, we train a recurrent neural network on trajectories so that the network learns to encode past information in its hidden state. In this way, we can learn a Markovian state space. To train this network, we combine and modify existing robotic priors so that they work in non-Markovian environments. We test our method in a 3D maze environment. To evaluate the quality of the learned state representation, we introduce a validation network that maps the learned states to the ground truth.
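The idea of scoring recurrent hidden states with a prior-based loss can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the tanh Elman cell, the random weights, the dimensions, and the choice of a temporal-coherence term (states should change slowly over time) as the example prior. It is not the architecture or the exact set of modified priors used in this work, and it only evaluates the loss without performing any training.

```python
import math
import random


def rnn_encode(observations, w_in, w_rec):
    """Run a tanh Elman cell over one trajectory.

    Returns the hidden state after every step. Each hidden state depends
    on the current observation and on the previous hidden state, so it
    can carry information about the past.
    """
    hidden = [0.0] * len(w_rec)
    states = []
    for obs in observations:
        hidden = [
            math.tanh(
                sum(w * x for w, x in zip(w_in[i], obs))
                + sum(w * h for w, h in zip(w_rec[i], hidden))
            )
            for i in range(len(w_rec))
        ]
        states.append(hidden)
    return states


def temporal_coherence_loss(states):
    """Mean squared change between consecutive states of one trajectory."""
    diffs = [
        sum((b - a) ** 2 for a, b in zip(s0, s1))
        for s0, s1 in zip(states, states[1:])
    ]
    return sum(diffs) / len(diffs)


# Hypothetical sizes and random data, chosen only to make the sketch run.
rng = random.Random(0)
obs_dim, state_dim, steps = 6, 2, 10
w_in = [[rng.uniform(-0.5, 0.5) for _ in range(obs_dim)] for _ in range(state_dim)]
w_rec = [[rng.uniform(-0.5, 0.5) for _ in range(state_dim)] for _ in range(state_dim)]
trajectory = [[rng.random() for _ in range(obs_dim)] for _ in range(steps)]

states = rnn_encode(trajectory, w_in, w_rec)
loss = temporal_coherence_loss(states)
```

In a training setup, a loss of this kind would be combined with further prior terms and minimized with respect to the network weights. The point of the sketch is only that the loss is computed on hidden states, which summarize the observation history, rather than on states derived from single observations.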

Results

Using the new validation network, we show that the learned state space contains both positional and structural information about the environment. Furthermore, we use reinforcement learning and show that the learned state space is sufficient to solve a maze navigation task.

© RBO
