The Physical Exploration Challenge

Robots Learning to Discover, Actuate and Explore Degrees of Freedom in the World

Contact Persons

Project Leaders:

Oliver Brock, Marc Toussaint

Researchers:

Manuel Baum, Johannes Kulick, Peter Englert, Sebastian Höfer, Roberto Martin Martin

Administration:

Elizabeth Ball, Carola Stahl

Associates:

Shlomo Zilberstein

Summary

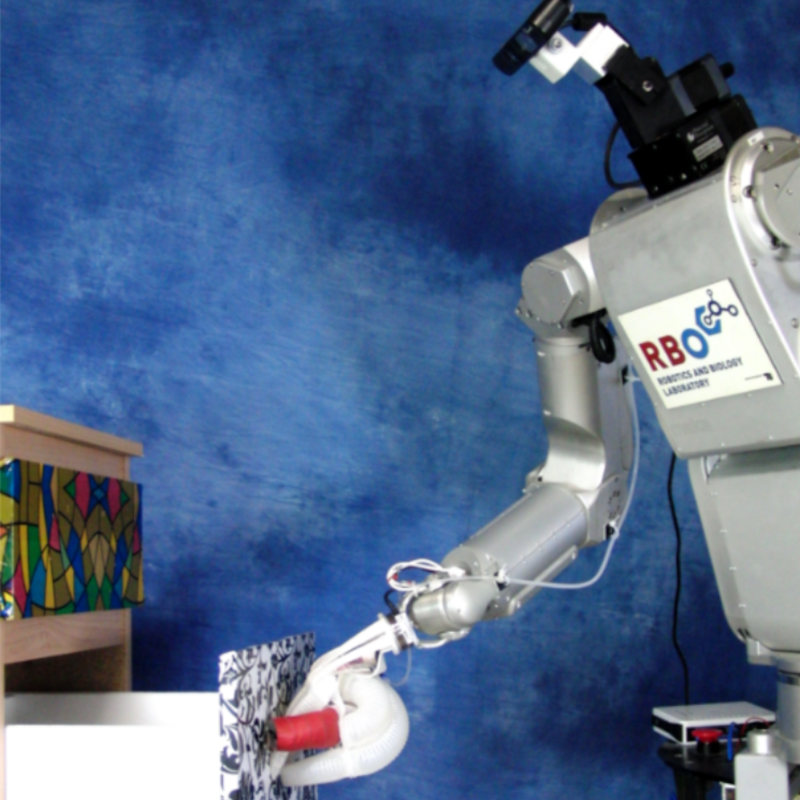

This project addresses a fundamental challenge at the intersection of machine learning and robotics. The machine learning community has developed formal methods to generate behavior for agents that learn from their own actions. However, several basic questions arise when such behavior is to be realized on real-world robotic systems that must learn to perceive, actuate and explore degrees of freedom (DoF) in the world.

These questions pertain to basic theoretical aspects as well as to the tight dependencies between exploration strategies and the perception and motor skills used to realize them. The goal of this project is to equip real-world robotic systems with one of the most interesting aspects of intelligence: an internal drive to learn, i.e., the ability to organize their behavior so as to maximize learning progress towards an objective.

If successful, we believe this will bring about a transformational change in the way such systems behave, in their autonomy of learning, in the way they ground acquired knowledge, and eventually also in the way they interact with humans and play their part in industrial and private applications.

To examine the interplay between exploration strategies, perception and motor skills, we built a mechanical device we call the lock-box. This device features multiple mechanical joints that can lock each other. It provides a rich testbed for researching how motor and perceptual skills can be used to explore a complex physical environment.

The robot has to cope with a very high degree of uncertainty in this domain. If it tries to move an object and the object does not move, there are multiple possible explanations: the object may be rigidly attached to the environment, it may be part of a mechanism that is only temporarily locked, or the mechanism may be movable but the robot did not perform the right action. We want to find out how a robot can resolve this ambiguity.
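The ambiguity described above can be made concrete with a standard Bayesian belief update over the competing explanations. The sketch below is illustrative only and is not the project's method: the hypothesis labels, the action names `retry_push_new_params` and `move_other_joint_then_push`, and all probabilities are hypothetical. It also scores candidate follow-up actions by expected information gain, one common way to formalize choosing actions that maximize learning progress.

```python
import math

# Hypothetical explanations for "the object did not move" (labels are illustrative):
#   rigid   - rigidly attached to the environment
#   locked  - part of a mechanism that is temporarily locked
#   movable - mechanism is free; the failure is due to poorly chosen action parameters
HYPOTHESES = ("rigid", "locked", "movable")

def normalize(belief):
    total = sum(belief.values())
    return {h: p / total for h, p in belief.items()}

def bayes_update(belief, likelihood):
    """Posterior over hypotheses: P(h | obs) is proportional to P(obs | h) * P(h)."""
    return normalize({h: likelihood[h] * belief[h] for h in belief})

def entropy(belief):
    """Shannon entropy in bits; lower means less uncertainty."""
    return -sum(p * math.log2(p) for p in belief.values() if p > 0)

def expected_info_gain(belief, p_move):
    """Expected entropy reduction for an action with a binary outcome (moved / not moved).

    p_move[h] is an assumed probability that the object moves under hypothesis h.
    """
    gain = entropy(belief)
    for moved in (True, False):
        lik = {h: p_move[h] if moved else 1.0 - p_move[h] for h in belief}
        p_obs = sum(lik[h] * belief[h] for h in belief)
        if p_obs > 0:
            gain -= p_obs * entropy(bayes_update(belief, lik))
    return gain

# Uniform prior, then a first push that produced no motion.
# Assumed: on a movable mechanism, a push with arbitrary parameters fails half of the time.
prior = {h: 1.0 / len(HYPOTHESES) for h in HYPOTHESES}
belief = bayes_update(prior, {"rigid": 1.0, "locked": 1.0, "movable": 0.5})

# Two hypothetical follow-up actions with made-up outcome models P(moves | h).
ACTIONS = {
    "retry_push_new_params": {"rigid": 0.0, "locked": 0.0, "movable": 0.6},
    "move_other_joint_then_push": {"rigid": 0.0, "locked": 0.8, "movable": 0.1},
}

best = max(ACTIONS, key=lambda a: expected_info_gain(belief, ACTIONS[a]))
print(belief)
print(best)  # prints "move_other_joint_then_push"
```

A single failed push cannot separate "rigid" from "locked", because both predict no motion equally well, so their probabilities stay equal after the update. Only an action whose outcome differs between the two, such as first moving another joint that might be blocking the mechanism, can reduce that part of the uncertainty, which is why it scores higher here under these assumed numbers.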

We have already found answers to some of the questions that arose on the way to our goal. Interactive Perception can be used to detect joints between movable objects. Coupled Learning of Action Parameters and Forward Models for Manipulation can give the robot the ability to learn models of its own actions and of the kinematic structure of its environment simultaneously.

Funding

The project is funded by the DFG Priority Programme 1527 "Autonomous Learning".